I was originally going to write an introductory article about DeepSeek R1, but I noticed that many people see it simply as an OpenAI clone, overlooking the “remarkable leap” revealed in their paper. So I decided to write a new article about the breakthrough in basic principles from AlphaGo to ChatGPT to the recent DeepSeek R1, and why it’s crucial for so-called AGI/ASI. As an ordinary AI algorithm engineer, I may not be able to go very deep, and I welcome any corrections.

AlphaGo breaks through human limits

In 1997, IBM’s chess AI Deep Blue made headlines by defeating world champion Garry Kasparov. Almost twenty years later, in 2016, DeepMind’s Go AI AlphaGo caused another sensation by defeating Go world champion Lee Sedol.

While both AIs defeated the strongest human players in their respective board games, their significance for humanity was quite different. Chess is played on a board with only 64 squares, while Go has a 19x19 grid. If we measure complexity by how many possible ways a game can be played (state space), the comparison is as follows:

- Theoretical State Space

- Chess: About 80 moves per game, 35 possible moves per turn → Theoretical state space of $35^{80} \approx 10^{123}$

- Go: About 150 moves per game, 250 possible moves per turn → Theoretical state space of $250^{150} \approx 10^{360}$

- Actual State Space After Rules Constraints

- Chess: Movement restrictions (pawns can’t move backward, castling rules) → Actual value $10^{47}$

- Go: Pieces can’t move and depend on “liberty” rules → Actual value $10^{170}$

| Dimension | Chess (Deep Blue) | Go (AlphaGo) |

|---|---|---|

| Board Size | 8×8 (64 squares) | 19×19 (361 points) |

| Average Legal Moves | 35 per turn | 250 per turn |

| Average Game Length | 80 moves/game | 150 moves/game |

| State Space | $10^{47}$ positions | $10^{170}$ positions |

Despite rules that greatly reduce complexity, the actual state space of Go is still $10^{123}$ times larger than that of chess. This is an enormous order of magnitude - consider that the total number of atoms in the universe is about $10^{78}$. Within the $10^{47}$ range, IBM computers could calculate all possible moves by brute force, so strictly speaking Deep Blue’s breakthrough had nothing to do with neural networks or models - it was just rule-based brute force searching, essentially a computer much faster than humans.

But the $10^{170}$ order of magnitude far exceeds current supercomputer capabilities, forcing AlphaGo to abandon brute force in favour of deep learning: The DeepMind team trained neural networks using human game records to predict the best next move based on the current state of the board. However, learning from top players’ moves can only bring the model up to their level, not beyond it.

AlphaGo first trained on human game records, then used reinforcement learning through self-play with a designed reward function. In the second game against Lee Sedol, AlphaGo’s 19th move (move 37[1]) made Lee think long and hard. Many players thought it was “a move a human would never make”. Without reinforcement learning and self-play, which relies solely on human game records, AlphaGo could never have made this move.

In May 2017, AlphaGo defeated Ke Jie 3-0, and the DeepMind team revealed that they had an even stronger model that hadn’t been used.[2] They discovered that they didn’t need to feed the AI games from human experts at alljust by telling it the basic rules of Go and letting the model self-play with rewards for winning and penalties for losing, the model could quickly learn Go from scratch and outperform humans. The researchers named this model AlphaZero because it required no human knowledge.

Let me repeat this incredible fact: without any human game data for training, relying only on self-play, the model was able to learn Go and become even stronger than AlphaGo, which was trained on human game data.

After that, Go became a game of who could play more like the AI, since the AI’s playing strength had already surpassed human understanding. Therefore, to outplay humans, models must free themselves from the constraints of human experience and judgement (even the strongest humans), only then can models truly play themselves and transcend human limitations.

AlphaGo’s victory over Lee Sedol sparked an AI frenzy. From 2016 to 2020, massive AI funding produced few countable achievements. The notable ones might only include facial recognition, speech recognition and synthesis, autonomous driving, and generative adversarial networks - but none of these qualified as superhuman intelligence.

Why haven’t such powerful superhuman capabilities emerged in other fields? It turns out that games like Go, with clear rules and single objectives in closed spaces, are best suited to reinforcement learning. Similar examples include DotA, StarCraft, Honor of Kings and poker. By contrast, the real world is much more complex: open-ended spaces with infinite possibilities at every step, no definite goals (such as “win”), no clear success/failure criteria (such as occupying more board territory), and high costs of trial and error - autonomous driving accidents can have serious consequences.

The AI field was quiet until the emergence of ChatGPT.

ChatGPT changes the world

The New Yorker called ChatGPT a blurry JPEG of the web[3]. All it does is feed all the text data on the internet into a model and predict what the next character should be when I type “what”.

The most likely next character would be "?

A model with limited parameters is forced to learn almost unlimited knowledge: books from hundreds of years ago in different languages, text generated on the internet over the last few decades. So it’s actually doing information compression: condensing human wisdom, historical events and astronomy/geography recorded in different languages into one model.

Scientists were surprised to discover that intelligence is created through compression.

We can understand it this way: Let the model read a detective story. At the end, “the murderer is”. If the AI can accurately predict the murderer’s name, we have reason to believe that it has understood the whole story - that is, it has “intelligence”, rather than just piecing together or memorising text.

The process of having the model learn and predict the next character is called pre-training. At this stage, the model can only continue to predict the next character, but can’t answer your questions. To achieve ChatGPT-like Q&A capabilities, a second phase of training is required, called Supervised Fine-Tuning (SFT). This involves, for example, manually constructing a batch of Q&A data:

# example 1

User: When did the World War II begin?

AI: 1939

# example 2

User: Please summarise the following text: {xxx}

AI: Here is the summary: xxx

Note that these examples are manually constructed to teach the AI to learn human question-answer patterns. When you say “Please translate this sentence: xxx”, the content given to the AI is:

User: Please translate this phrase: xxx

AI:

You see, it’s still just predicting the next character. In the process, the model doesn’t get any smarter - it simply learns human question-answering patterns and understands what you’re asking it to do.

This isn’t enough, because the quality of the model’s output can vary, and some answers may involve racial discrimination or violate human ethics (“How do you rob a bank?”). At this point, we need to recruit people to annotate thousands of model outputs: giving high scores to good answers and negative scores to unethical answers. We can then use this annotated data to train a reward model that determines whether model outputs match human preferences.

We use this reward model to further train the large model so that its outputs become more aligned with human preferences. This process is called Reinforcement Learning from Human Feedback (RLHF).

To summarise: Intelligence emerges as the model predicts the next character, then through supervised fine-tuning it learns human question-answer patterns, and finally through RLHF it learns to generate answers that match human preferences.

This is essentially how ChatGPT works.

Big models hit a wall

OpenAI scientists were among the first to believe that compression equals intelligence. They believed that using more massive, high-quality data and training larger models on larger GPU clusters would produce greater intelligence. ChatGPT was born from this belief. While Google created the Transformer, they couldn’t make the same bold bets as startups.

DeepSeek V3 did something similar to ChatGPT. Due to US GPU export controls, clever researchers were forced to use more efficient training techniques (MoE/FP8). With their world-class infrastructure team, they trained a model comparable to GPT-4o for just 5.5 million, compared to OpenAI’s training costs of over 100 million.

But this article focuses on R1.

The key point is that by the end of 2024, human-generated data will have been largely exhausted. While model size could easily increase by a factor of 10 or even 100 with more GPU clusters, the new data generated by humans each year is negligible compared to the existing data from the past decades and centuries. According to Chinchilla’s scaling laws, doubling the model size requires doubling the amount of training data.

This has led to the reality of pre-training hitting a wall: although model size has increased by a factor of 10, we can no longer obtain 10 times more high quality data. The delayed release of GPT-5 and rumours about large model companies not doing pre-training are related to this issue.

RLHF is not RL

On another front, the biggest problem with Reinforcement Learning from Human Feedback (RLHF) is that ordinary human intelligence is no longer sufficient to evaluate model results. In the ChatGPT era, when AI intelligence was below average human levels, OpenAI could hire cheap labour to rate AI output as good/medium/poor. But soon, with the advent of GPT-4o/Claude 3.5 Sonnet, the intelligence of large models surpassed that of ordinary humans, and only expert-level annotators could potentially help improve the model.

Apart from the cost of hiring experts, what comes after the experts? Eventually, even top experts won’t be able to evaluate model results. Does this mean that AI has surpassed humans? Not really. When AlphaGo played move 37 against Lee Sedol, it seemed impossible to win from a human perspective. So if Lee Sedol were to give human feedback (HF) on the AI’s move, he would probably give it a negative score. In this way, AI would never escape the shackles of human thinking.

You can think of AI as a student whose evaluators have changed from high school teachers to university professors. The student will improve, but is unlikely to surpass the professors. RLHF is essentially a training method to please humans - it makes the model’s output conform to human preferences, while eliminating the possibility of surpassing humans.

Regarding RLHF and RL, Andrej Karpathy recently expressed similar views[4]:

There are two major types of learning, in both children and in deep learning. There is 1) imitation learning (watch and repeat, i.e. pretraining, supervised finetuning), and 2) trial-and-error learning (reinforcement learning). My favorite simple example is AlphaGo - 1) is learning by imitating expert players, 2) is reinforcement learning to win the game. Almost every single shocking result of deep learning, and the source of all magic is always 2. 2 is significantly significantly more powerful. 2 is what surprises you. 2 is when the paddle learns to hit the ball behind the blocks in Breakout. 2 is when AlphaGo beats even Lee Sedol. And 2 is the “aha moment” when the DeepSeek (or o1 etc.) discovers that it works well to re-evaluate your assumptions, backtrack, try something else, etc.

OpenAI’s solution

Daniel Kahneman proposed in “Thinking, Fast and Slow” that the human brain has two modes of thinking: Fast Thinking for questions that can be answered without much thought, and Slow Thinking for questions that require long contemplation, such as in Go.

Since training has reached its limits, could we improve the quality of answers by increasing the time spent thinking during reasoning? There’s a precedent for this: scientists discovered early on that adding “Let’s think step by step” to model prompts allowed models to output their thinking process, ultimately leading to better results. This is called Chain-of-Thought (CoT).

After large models hit the pre-training wall in late 2024, using Reinforcement Learning (RL) to train model chains of thought became the new consensus. This training significantly improved performance on certain specific, objectively measurable tasks (such as mathematics and coding). Starting with a standard pre-trained model, the second stage uses reinforcement learning to train chains of reasoning. These models are called reasoning models, including OpenAI’s o1 model, released in September 2024, and the subsequent o3 model.

Unlike ChatGPT and GPT-4/4o, in the training process of Reasoning models like o1/o3, human feedback is no longer important because reasoning results can be automatically evaluated for rewards/penalties. Anthropic’s CEO described this technical direction as a turning point in yesterday’s article[5]: there is a powerful new paradigm in its early stages of scaling laws that can quickly achieve major breakthroughs.

Although OpenAI hasn’t released the details of its reinforcement learning algorithm, the recent release of DeepSeek R1 shows us a viable approach.

DeepSeek R1-Zero

I suspect that DeepSeek named their pure reinforcement learning model R1-Zero as a tribute to AlphaZero, the algorithm that beat the best players by playing itself without learning any game records.

To train a slow thinking model, you first need to construct high quality data containing thought processes, and if you want reinforcement learning independent of humans, you need to quantitatively score (good/bad) the model’s thinking process to provide rewards and penalties.

As mentioned above, mathematics and code datasets best meet these requirements. Mathematical derivations can be verified using regular expressions, while code output can be verified by running it directly in compilers.

In mathematics textbooks, for example, we often see such reasoning processes:

<thinking>

Let x be the root of the equation, squaring both sides: x² = a - √(a+x)

Rearranging: √(a+x) = a - x²

Square again: (a+x) = (a - x²)²

Expand: a + x = a² - 2a x² + x⁴

Simplify: x⁴ - 2a x² - x + (a² - a) = 0

</thinking>

<answer>x⁴ - 2a x² - x + (a² - a) = 0</answer>

The text above contains a complete chain of reasoning. We can use regular expressions to match the thought process to the final answer, allowing us to quantitatively evaluate the model’s reasoning process and results.

In the reinforcement learning (RL) training, R1 didn’t explicitly reward or penalise each step of the reasoning chain. Instead, they created a reinforcement learning algorithm called GRPO (Group Relative Policy Optimization) that rewards logically coherent, correctly formatted thought chain outcomes, implicitly encouraging the model to form thought chains on its own.

This post explains the principle of GRPO well with an example. I’ll translate it: Let the model generate multiple answers simultaneously, calculate scores for each answer, compare advantages within the group, and train the model through RL to favour higher scoring answers.

Using the question $2+3=?$ as an example

Step 1: Model generates multiple answers:

- “5”

- “6”

- “

2+3=5 5 ”

Step 2: Score each answer:

- “5” → 1 point (correct, no chain of reasoning)

- 6" → 0 points (wrong)

- “

2+3=5 5 ” → 2 points (correct, with chain of thoughts)

Step 3: Calculate the average score of all answers:

- Average score = (1 + 0 + 2) / 3 = 1

Step 4: Compare the score of each answer with the average:

- “5” → 1 - 1 = 0 (same as average)

- 6" → 0 - 1 = -1 (below average)

- “

2+3=5 5 ” → 2 - 1 = 1 (above average)

Step 5: Reinforcement learning to bias the model towards generating higher scoring responses, i.e. those with chains of thoughts and correct results.

This is essentially how GRPO works.

Based on the V3 model, they used GRPO for RL training on mathematics and code data, ultimately producing the R1-Zero model, which showed a significant improvement in reasoning metrics over DeepSeek V3, proving that RL alone can stimulate model reasoning.

This is another AlphaZero moment. In the R1-Zero training process, completely independent of human intelligence, experience and preferences, it relies solely on RL to learn objective, measurable human truths, ultimately achieving reasoning abilities far superior to any non-reasoning model.

However, the R1-Zero model used only reinforcement learning without supervised learning, so it hadn’t learned human question-answer patterns and couldn’t respond to human questions. It also had problems with language mixing, switching between English and Chinese, making it difficult to read. So the DeepSeek team:

- First collected a small amount of high quality Chain-of-Thought (CoT) data to perform initial supervised fine-tuning on the V3 model, fixing the language inconsistency issue to get a cold-start model.

- They then performed pure RL training similar to R1-Zero on this cold-start model, adding language consistency rewards.

- Finally, to handle more general, broad non-reasoning tasks (such as writing, factual Q&A), they constructed a dataset for secondary fine-tuning.

- Combined reasoning and general task data, using mixed reward signals for final reinforcement learning.

This process can be summarised as

Supervised Learning (SFT) - Reinforcement Learning (RL) - Supervised Learning (SFT) - Reinforcement Learning (RL)

This process resulted in DeepSeek R1.

DeepSeek R1’s contribution to the world is to be the first open source reasoning model comparable to closed source (o1). Now users around the world can see the model’s reasoning process before answering questions - its ‘inner monologue’ - completely free of charge.

More importantly, it revealed to researchers what OpenAI had been hiding: reinforcement learning can work without human feedback, and pure RL can train the strongest reasoning models. That’s why I think R1-Zero is more important than R1.

Aligning with human taste VS outperforming humans

A few months ago I read interviews with the founders of Suno and Recraft[6][7]. Suno is trying to make AI-generated music more pleasant to listen to, while Recraft is trying to make AI-generated images more beautiful and artistic. After reading, I had a vague feeling that: aligning models to human taste rather than objective truth seems to avoid the truly brutal, quantifiable performance arena of big models.

It’s exhausting to compete daily with all the opponents on leaderboards like AIME and SWE-bench, never knowing when a new model might outperform you (remember Mistral?). But human taste is like fashion: it doesn’t get better, it just changes. Suno/Recraft are clearly wise - they just need to satisfy the most tasteful musicians and artists in the industry (which is still a challenge), and leaderboards don’t matter.

The downside of catering to human taste is also obvious: improvements resulting from your efforts and hard work are hard to quantify. For example, is Suno V4 really better than V3.5? In my experience, V4 has only improved audio quality, not creativity. Moreover, models dependent on human taste are destined never to surpass humans: if AI derives a mathematical theorem beyond contemporary human understanding, it would be revered as divine, but if Suno creates music beyond human taste and comprehension, it may just sound like noise to ordinary ears.

The struggle to conform to objective truth is painful, but fascinating because it holds the possibility of transcending man.

Addressing some doubts

Has DeepSeek’s R1 model really outperformed OpenAI?

In terms of benchmarks, R1’s reasoning ability surpasses all non-reasoning models, including ChatGPT/GPT-4/4o and Claude 3.5 Sonnet, is close to o1 (also a reasoning model), but falls short of o3. However, o1/o3 are closed source models.

Many people’s actual experiences may differ, as Claude 3.5 Sonnet excels at understanding user intent.

DeepSeek collects user chat content for training

Many people mistakenly believe that chat software like ChatGPT gets smarter by collecting user chat content for training. If this were true, WeChat and Messenger would have created the world’s most powerful large-scale models.

After reading this article, you should realise that most ordinary users’ daily chat data is no longer important. RL models only need to be trained on very high quality reasoning data with chains of thoughts, such as mathematics and code. This data can be generated by the model itself and does not require human annotation. Therefore, Alexandr Wang, CEO of Scale AI, whose company annotates model data, may face a serious threat as future models will need less and less human annotation.

Update: This article analysing r1-zero by ARC-AGI suggests a new idea: future reasoning models could collect AI-generated thought chains from user-model conversations for training - unlike the common assumption of AI secretly training on chat records, what users say doesn’t matter while they pay for results, the model gets a thought chain data point at zero cost.

DeepSeek R1 is powerful because it has secretly distilled OpenAI’s models.

R1’s main performance improvement comes from reinforcement learning. You can see that the R1 zero model, which uses pure RL without supervised data, is also strong in reasoning. R1 used some supervised learning data during the cold start, mainly to solve language consistency problems, which doesn’t improve reasoning ability.

Also, many people misunderstand distillation: it typically means using a powerful model as a teacher to guide a smaller, weaker student model by having the student memorise answers directly, like using R1 to distill LLama-70B. A distilled student model is almost certainly weaker than the teacher model, but R1 outperforms o1 on some metrics, so claiming that R1’s performance comes from distilling o1 is pretty silly.

I asked DeepSeek and they said it’s an OpenAI model, so it must be a wrapper.

Big models don’t know current time, who trained them, or whether they were trained on H100 or H800 machines. A user on X gave an apt analogy[8]: It’s like asking an Uber passenger what brand of tyres their car uses - the models have no reason to know this information.

Some thoughts

AI has finally broken free from the shackles of human feedback. DeepSeek R1-Zero demonstrates how to improve model performance with minimal human feedback - this is its AlphaZero moment. Many people used to say that “artificial intelligence has as much intelligence as artificiality”, but this may no longer be true. If a model can derive the Pythagorean theorem from a right triangle, we have reason to believe that one day it will derive theorems yet undiscovered by mathematicians.

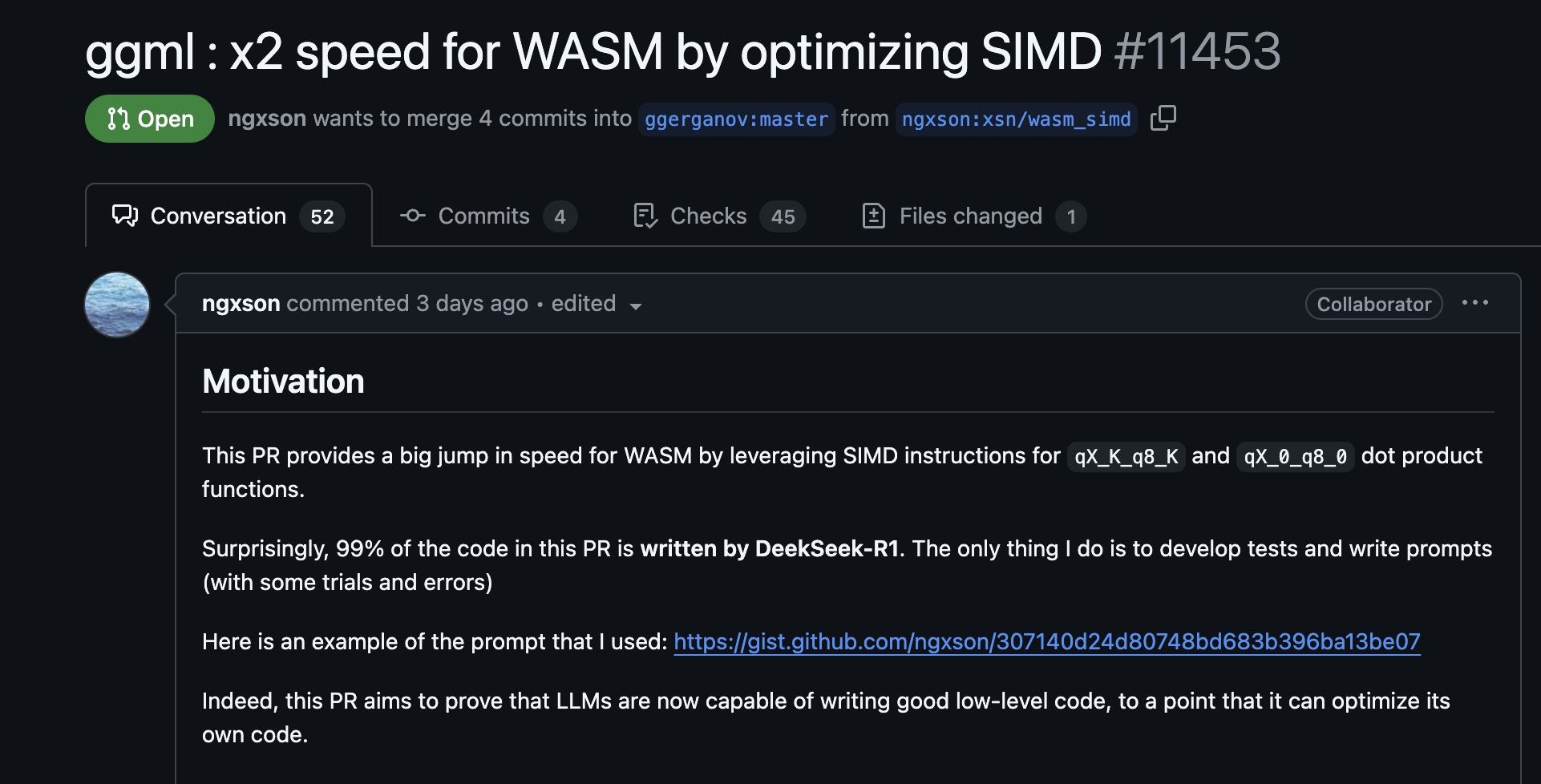

Is there still any point in writing code? I don’t know. This morning I saw a popular GitHub project, llama.cpp, where a contributor has posted a PR stating that he has achieved a 2x WASM speed improvement by optimising SIMD instructions, with 99% of the code completed by DeepSeek R1[9]. This is definitely not junior engineer level code - I can no longer say that AI can only replace junior programmers.

Of course, I’m still very excited about it. The limits of human capabilities have been pushed further. Well done, DeepSeek! It’s the coolest company in the world right now.